Understanding the mechanics of how search engines interact with your website is crucial for optimizing your online presence. One of the fundamental tools in guiding search engines is the robots.txt file. In this article, we will explore what a robots.txt file is, how it works, and why it’s vital for SEO.

What is a robots.txt file?

1. Definition:

- A robots.txt file is a simple text file placed in the root directory of your website. It provides instructions to search engine crawlers (also known as robots or spiders) on how to interact with your site's pages.

2. Purpose:

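- The primary purpose of a robots.txt file is to manage crawler traffic to your site, so that servers are not overwhelmed by requests, and to keep crawlers away from duplicate or low-value pages. Note that it controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, and the file is not a mechanism for protecting sensitive information.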

How a robots.txt file works

1. Directives:

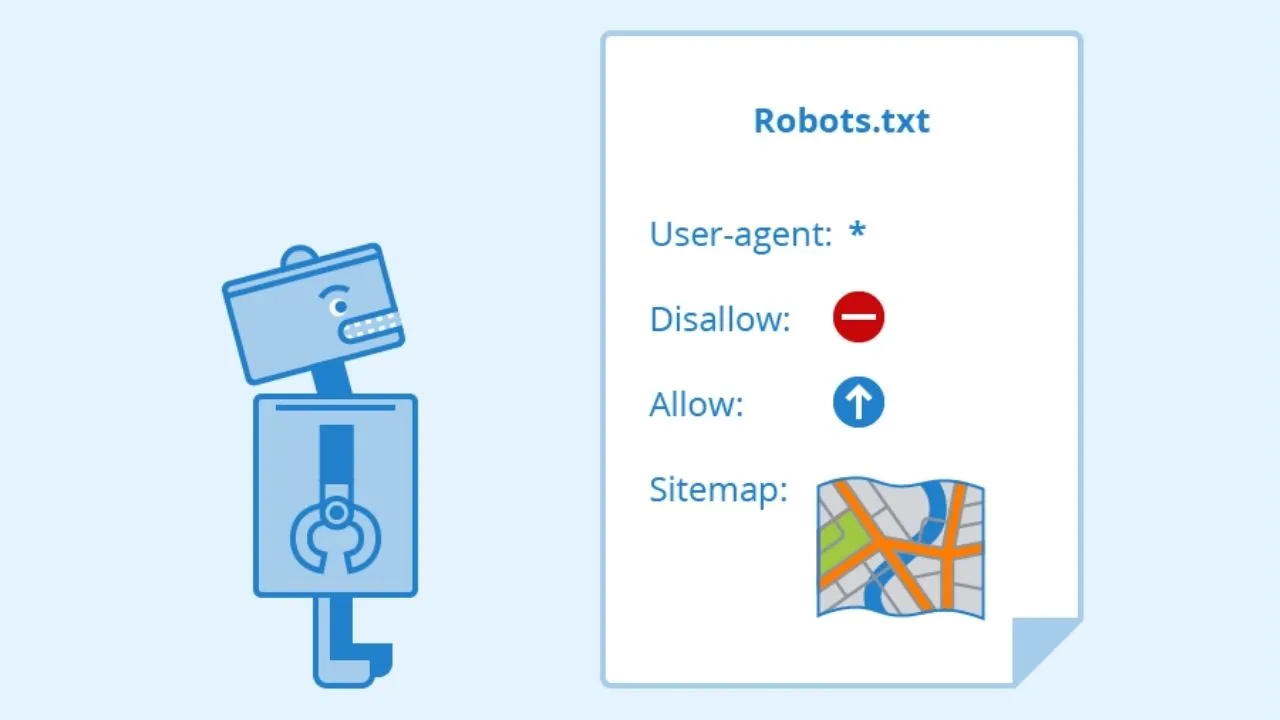

- A robots.txt file uses specific directives to communicate with search engine crawlers. These directives include:

- User-agent: Specifies which crawler the directives apply to (e.g., Googlebot, Bingbot).

- Disallow: Instructs the crawler not to access specific pages or directories.

- Allow: Overrides a disallow directive, permitting access to a specific page within a disallowed directory.

- Sitemap: Provides the location of the site's XML sitemap to help search engines find all relevant pages.

2. Example of a robots.txt file:

User-agent: *
Disallow: /private/
Allow: /private/public-page.html

Sitemap: http://www.example.com/sitemap.xml

- In this example:

- All crawlers are instructed not to access the /private/ directory.

- An exception is made for /private/public-page.html, which is allowed to be crawled.

- The location of the XML sitemap is specified to assist search engines in finding all relevant pages.
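To see why the Allow line wins over the broader Disallow line, here is a minimal Python sketch of the "most specific rule wins" evaluation that Google documents and RFC 9309 describes. It assumes the example group is headed by a `User-agent: *` line, and it deliberately ignores wildcards (`*`, `$`) and percent-encoding. The function names `parse_rules` and `is_allowed` are our own illustrations, not part of any library, and other crawlers may resolve conflicts differently.

```python
EXAMPLE = """\
User-agent: *
Disallow: /private/
Allow: /private/public-page.html
Sitemap: http://www.example.com/sitemap.xml
"""


def parse_rules(robots_txt: str, agent: str = "*") -> list[tuple[str, str]]:
    """Collect (directive, path) pairs from the groups addressed to `agent`."""
    rules = []
    active = False     # True while inside a group that names `agent`
    in_agents = False  # True while reading consecutive User-agent lines
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not in_agents:
                active = False  # a new group starts here
            in_agents = True
            active = active or value.lower() == agent.lower()
        elif field in ("allow", "disallow"):
            in_agents = False
            if active and value:  # an empty path matches nothing
                rules.append((field, value))
    return rules


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching prefix wins; on a tie, allow wins; no match means allowed."""
    best_len, allowed = -1, True
    for directive, prefix in rules:
        if path.startswith(prefix):
            is_allow = directive == "allow"
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len, allowed = len(prefix), is_allow
    return allowed


if __name__ == "__main__":
    rules = parse_rules(EXAMPLE)
    for path in ("/private/secret.html", "/private/public-page.html", "/index.html"):
        print(path, "->", "allowed" if is_allowed(rules, path) else "blocked")
```

Because `/private/public-page.html` is a longer match than `/private/`, the exception applies regardless of the order in which the two lines appear in the file.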

Why a robots.txt file is important for SEO

1. Controlling Crawl Budget:

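- A well-configured robots.txt file helps manage your crawl budget by keeping crawlers away from low-value URLs, so that more of their limited crawl activity is spent on important pages. This improves crawl efficiency and makes it more likely that critical content is discovered and indexed promptly.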

2. Preventing Duplicate Content:

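- By disallowing the crawling of duplicate or low-value pages, a robots.txt file helps limit duplicate content issues that can negatively impact your SEO rankings. Keep in mind that blocking a page does not guarantee it stays out of the index; for pages that must not appear in search results, a noindex directive or a canonical tag on a crawlable page is the more reliable option.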

3. Protecting Sensitive Information:

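- The robots.txt file can be used to ask search engines to stay out of private areas of your site or areas that are under development. It is not a security control, however: the file is publicly readable, compliance is voluntary, and listing a path reveals its location. Genuinely sensitive content should be protected with authentication.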

4. Enhancing Site Performance:

- By preventing search engines from crawling unnecessary pages, a robots.txt file can reduce server load, enhancing site performance and user experience.

How to create and implement a robots.txt file

1. Creating a robots.txt file:

- A robots.txt file can be created using any text editor. It should be saved with the name "robots.txt" and placed in the root directory of your website.

2. Common directives:

- Disallow All Crawlers from a Directory:

User-agent: *
Disallow: /directory/

- Allow Specific Pages within a Disallowed Directory:

User-agent: *
Disallow: /directory/
Allow: /directory/specific-page.html

- Specifying the Sitemap Location:

Sitemap: http://www.example.com/sitemap.xml
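If you maintain these directives for several sites or environments, it can be easier to generate the file than to edit it by hand. The following is a minimal sketch, not a definitive tool: `build_robots_txt` and its argument layout are hypothetical names chosen for this illustration, and the paths are the placeholder values used above.

```python
def build_robots_txt(groups, sitemaps=()):
    """Render robots.txt text.

    groups:   list of (user_agent, [(directive, path), ...]) pairs.
    sitemaps: iterable of absolute sitemap URLs.
    """
    blocks = []
    for user_agent, rules in groups:
        lines = [f"User-agent: {user_agent}"]
        lines += [f"{directive}: {path}" for directive, path in rules]
        blocks.append("\n".join(lines))
    sitemap_lines = [f"Sitemap: {url}" for url in sitemaps]
    if sitemap_lines:
        blocks.append("\n".join(sitemap_lines))
    # Separate groups with a blank line and end the file with a newline.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    text = build_robots_txt(
        [("*", [("Disallow", "/directory/"),
                ("Allow", "/directory/specific-page.html")])],
        sitemaps=["http://www.example.com/sitemap.xml"],
    )
    print(text, end="")
    # To save it: pathlib.Path("robots.txt").write_text(text, encoding="utf-8")
```

Keeping each group's rules on consecutive lines, with blank lines only between groups, avoids problems with parsers that treat a blank line as the end of a group.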

3. Uploading the robots.txt file:

- The robots.txt file should be uploaded to the root directory of your website (e.g., http://www.example.com/robots.txt).
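Crawlers look for the file only at the root of each scheme, host, and port combination, so a copy placed in a subdirectory is ignored and each subdomain needs its own file. As a small illustration, the helper below (the name `robots_url` is our own) derives the location a crawler would request for any page URL, using Python's standard `urllib.parse` module.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace the rest.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_url("http://www.example.com/blog/post.html?x=1"))
    print(robots_url("https://shop.example.com:8443/a/b"))
```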

4. Testing the robots.txt file:

- Use a tool such as the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to ensure your file is fetched and parsed correctly and does not block important pages inadvertently.
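You can also run a quick local check before uploading. The sketch below uses `urllib.robotparser` from Python's standard library to compare the rules against a list of URLs you expect to be crawlable or blocked; `check_rules` and the sample expectations are hypothetical. One caveat: Python's parser applies the first matching rule in file order rather than the longest match, so the Allow line is listed before the Disallow line here. Treat the result as a sanity check, not as a prediction of how every search engine will behave.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_LINES = [
    "User-agent: *",
    "Allow: /directory/specific-page.html",  # listed first: first match wins here
    "Disallow: /directory/",
]

# URL -> whether we expect crawlers to be allowed to fetch it.
EXPECTED = {
    "http://www.example.com/": True,
    "http://www.example.com/directory/": False,
    "http://www.example.com/directory/specific-page.html": True,
}


def check_rules(robots_lines, expectations, agent="*"):
    """Return (url, expected, actual) for every URL whose result differs."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    mismatches = []
    for url, expected in expectations.items():
        actual = parser.can_fetch(agent, url)
        if actual != expected:
            mismatches.append((url, expected, actual))
    return mismatches


if __name__ == "__main__":
    problems = check_rules(ROBOTS_LINES, EXPECTED)
    for url, expected, actual in problems:
        print(f"{url}: expected allowed={expected}, got allowed={actual}")
    if not problems:
        print("All URLs behave as expected.")
```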

Best practices for managing a robots.txt file

1. Keep it Simple:

- Use clear and concise directives to avoid confusion. Complex rules can lead to errors and unintended blocking of important pages.

2. Regularly Review and Update:

- Regularly review and update your robots.txt file to reflect changes in your site's structure and content. Ensure that new important pages are not inadvertently blocked.

3. Monitor Crawl Stats:

- Use tools like Google Search Console to monitor how search engines interact with your site. This helps identify any issues related to your robots.txt file.

4. Avoid Blocking CSS and JavaScript:

- Do not block CSS and JavaScript files unless necessary. Search engines need access to these files to render and understand your pages correctly.

Conclusion

A robots.txt file is a powerful tool for managing how search engines crawl your website. By understanding its importance and implementing it correctly, you can manage your crawl budget, keep crawlers away from duplicate or low-value pages, and reduce unnecessary server load, all of which contribute to better SEO performance.

Whether you are optimizing your site’s structure or managing sensitive areas, a well-configured robots.txt file is essential for maintaining an efficient and effective online presence.

Elevate Your SEO Strategy with Expert Robots.txt Management!

Is your website’s search performance falling short? At Softhat IT Solutions, we specialize in optimizing robots.txt files to ensure your site is effectively crawled and indexed by search engines. Contact us today to learn how our SEO services can enhance your site’s visibility and performance.